Discussions at the Compass AI & Data Summit in Budapest and the Marketing Analytics Summit in Berlin, together with what we’re seeing across our own client portfolio, made one thing clear: the industry is entering a period of consolidation and realism. The conceptual fog around ‘agentic analytics’ is lifting. Companies are beginning to understand which parts of their analytical estate must become stronger, which parts must become simpler and which parts will be reshaped entirely.

Three trajectories stand out because they address the same structural limitation from different angles. They are not trends in the fashionable sense. They are structural corrections to a decade of tool-first thinking.

If you want more background on agentic analytics before diving into where the industry is heading, our previous blog post, ‘Why Agentic Analytics Fails Without Semantics: Lessons from Compass AI & MAS Berlin’, is a good place to start.

1. Semantic layers become mandatory

A few years ago, semantic layers were something only the most disciplined teams cared about. Many organisations believed they could ‘solve’ consistency inside dashboards or by training analysts to interpret metrics the same way. However, that approach collapses immediately in an agentic environment. AI cannot negotiate meaning. It cannot ask a colleague which version of revenue is correct. It cannot guess which join path reflects the business logic rather than a historical accident.

As a result, semantic layers are becoming the equivalent of internal API contracts: versioned, governed and treated as a shared dependency across every analytical interface. We’re seeing companies move from ‘we should centralise definitions’ to ‘we cannot operate without a definition store that agents, dashboards and notebooks all consume from’. This shift mirrors what happened in software engineering when microservices emerged. Once teams began consuming services programmatically, service interfaces became sacred. Breaking the contract broke the system.
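To make the API-contract analogy concrete, here is a minimal Python sketch of a versioned definition store. It assumes a hypothetical schema: `MetricDefinition`, `DefinitionStore` and `is_breaking_change` are illustrative names, not the API of dbt, LookML, Omni or any other product. Every consumer resolves a metric by name, unknown metrics fail loudly instead of being guessed, and a change that alters the calculation or removes a supported grain is rejected unless someone explicitly approves it.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical schema for illustration; not the API of any real semantic-layer tool.


@dataclass(frozen=True)
class MetricDefinition:
    """A single governed metric definition."""
    name: str
    version: int
    expression: str         # the one SQL expression every consumer must use
    grain: tuple[str, ...]  # dimensions the metric may be sliced by
    owner: str              # team accountable for the definition


def is_breaking_change(old: MetricDefinition, new: MetricDefinition) -> bool:
    """A change breaks the contract if it alters the calculation or drops a supported grain."""
    changed_logic = old.expression != new.expression
    dropped_grain = not (set(old.grain) <= set(new.grain))
    return changed_logic or dropped_grain


@dataclass
class DefinitionStore:
    """One store that agents, dashboards and notebooks all resolve metrics from."""
    _history: dict[str, list[MetricDefinition]] = field(default_factory=dict)

    def publish(self, definition: MetricDefinition, approved_breaking: bool = False) -> None:
        versions = self._history.setdefault(definition.name, [])
        expected = versions[-1].version + 1 if versions else 1
        if definition.version != expected:
            raise ValueError(f"{definition.name}: expected version {expected}")
        if versions and is_breaking_change(versions[-1], definition) and not approved_breaking:
            raise ValueError(f"{definition.name}: breaking change needs explicit approval")
        versions.append(definition)

    def resolve(self, name: str, version: int | None = None) -> MetricDefinition:
        """Return a pinned version, or the latest one; unknown metrics fail loudly."""
        versions = self._history.get(name)
        if not versions:
            raise KeyError(f"no governed definition for metric '{name}'")
        if version is None:
            return versions[-1]
        for definition in versions:
            if definition.version == version:
                return definition
        raise KeyError(f"{name} has no version {version}")


store = DefinitionStore()
store.publish(MetricDefinition("net_revenue", 1, "SUM(gross_amount) - SUM(refunds)", ("day", "region"), "finance"))
# Adding a grain is backwards compatible, so version 2 is accepted without approval.
store.publish(MetricDefinition("net_revenue", 2, "SUM(gross_amount) - SUM(refunds)", ("day", "region", "channel"), "finance"))
print(store.resolve("net_revenue").version)   # 2
print(store.resolve("net_revenue", 1).grain)  # ('day', 'region')
```

Pinning a version lets an agent keep answering against a known definition while a new one is under review, much as a service client pins an API version. What counts as "breaking" here (changed expression, dropped grain) is an assumption; real governance rules would be set by the teams that own the metrics.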

The same will be true in analytics.

A semantic layer is no longer a convenience. It is the minimum requirement for safe autonomy.

And this isn’t theory. The companies that already operate with strong semantic layers (often those that invested heavily in dbt, LookML, Omni or home-grown definition stores) are the ones reporting the most stable behaviour from AI assistants. Teams without such layers see agents drift, contradict dashboards or break on edge cases that humans would instinctively catch. The difference isn’t the model. It’s the meaning.

2. Agents become smaller and more specialised

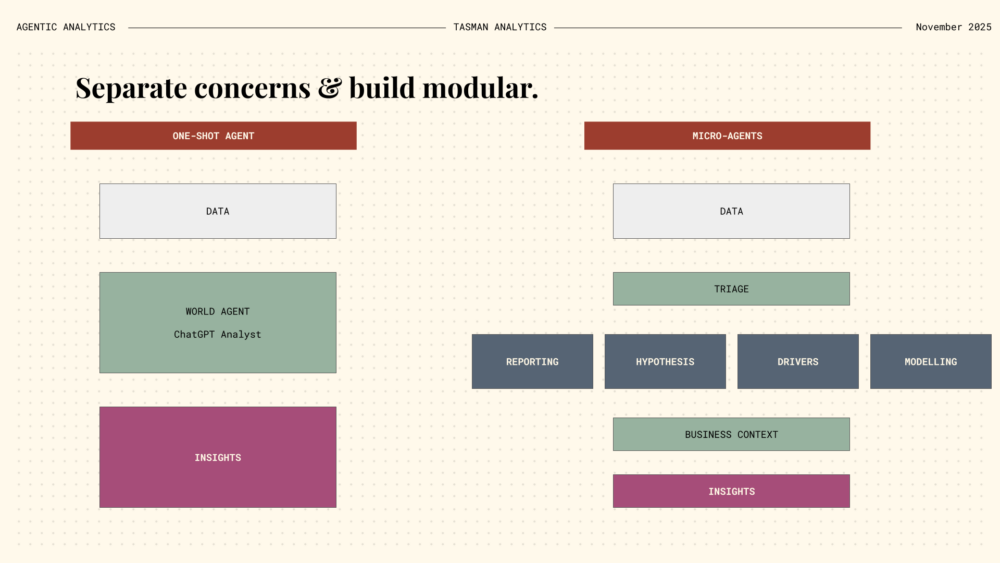

The second trajectory is architectural. The industry has spent the past year experimenting with large, general-purpose agents designed to take a plain-English analytics question and ‘figure everything out’. Those prototypes make great demos. They rarely survive real workloads. The failure mode is always the same: too much surface area and too many failure points hidden inside a single opaque workflow.

The natural correction is towards micro-agent architectures. Each agent handles a single transformation: triage, semantic grounding, SQL generation, hypothesis evaluation, narrative construction. When an error occurs, it surfaces at a specific stage, where it is immediately visible and can be tested systematically. This mirrors the modular design principles used in mature engineering environments. A system composed of many small, deterministic units is far easier to reason about than a single sprawling one.

We already see this shift in practice. Early adopters who initially deployed a ‘copilot’ approach are now re-architecting their agentic systems into chains of tightly scoped components, each with explicit inputs, outputs and success criteria. These micro-agents are easier to verify, easier to benchmark and easier to improve iteratively. They also allow companies to introduce autonomy gradually: first grounding, then SQL checks, then interpretation, then hypothesis testing.
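A chain of tightly scoped components can be sketched as a pipeline of stages, each pairing a transformation with its own success criterion. The Python below is a minimal illustration under stated assumptions: `Stage`, `run_pipeline`, `GOVERNED_METRICS` and the `semantic.net_revenue` table are hypothetical names, the stage functions are deterministic stand-ins for real agents (no model is called), and only the first three of the five stages named above are shown. The point is that a failure is reported against the stage that produced it.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable

State = dict[str, Any]


class StageError(Exception):
    """Raised when a stage's output fails its success criterion; names the stage."""


@dataclass(frozen=True)
class Stage:
    name: str
    run: Callable[[State], State]    # explicit input -> explicit output
    check: Callable[[State], bool]   # success criterion applied to the output


def run_pipeline(stages: list[Stage], request: State) -> State:
    """Run stages in order, validating each output before the next stage sees it."""
    state = dict(request)
    for stage in stages:
        output = stage.run(state)
        if not stage.check(output):
            raise StageError(f"stage '{stage.name}' failed its success criterion")
        state.update(output)
    return state


# Hypothetical, deterministic stand-ins for real micro-agents.
GOVERNED_METRICS = {"revenue": "net_revenue"}


def triage(state: State) -> State:
    question = state["question"].lower()
    return {"intent": "metric_lookup" if "revenue" in question else "unsupported"}


def ground(state: State) -> State:
    question = state["question"].lower()
    for term, metric in GOVERNED_METRICS.items():
        if term in question:
            return {"metric": metric}
    return {"metric": None}


def generate_sql(state: State) -> State:
    return {"sql": f"SELECT month, value FROM semantic.{state['metric']}"}


PIPELINE = [
    Stage("triage", triage, lambda out: out["intent"] == "metric_lookup"),
    Stage("semantic_grounding", ground, lambda out: out["metric"] is not None),
    Stage("sql_generation", generate_sql, lambda out: out["sql"].startswith("SELECT")),
]

result = run_pipeline(PIPELINE, {"question": "What was revenue last month?"})
print(result["sql"])  # SELECT month, value FROM semantic.net_revenue
```

A question the triage stage cannot route, such as "Why did churn rise?", raises `StageError` naming `triage` rather than producing a plausible but ungrounded answer. Gradual autonomy falls out of the same structure: running `PIPELINE[:2]` exercises only triage and grounding, and later stages are appended once their checks are trusted.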

The teams achieving the highest reliability now treat agentic workflows as pipelines, not personalities.

3. Culture becomes the defining competitive advantage

The most surprising trajectory, though perhaps the least surprising to practitioners, is that cultural alignment is becoming the decisive factor in whether teams gain value from autonomy. AI amplifies whatever cultural pattern already exists. If teams share definitions, reason collectively and treat metrics as common assets, agents amplify that clarity. If teams negotiate definitions, protect domain silos or improvise logic in spreadsheets, agents amplify the fragmentation.

This became a clear theme across both events.

Amy Raygada’s emphasis on organisational alignment and Lucy Nemes’ focus on analytical culture are not abstract arguments. They reflect the practical reality that agentic systems rely on shared meaning far more than dashboards ever did. Dashboards can tolerate mild ambiguity because a human interprets them. Agents cannot tolerate ambiguity at all because they execute logic directly.

We see the consequences weekly. In scale-ups where marketing, product and finance agree on definitions, an agent can answer questions about CAC, ROAS or LTV with long-term consistency. In organisations where each team ‘owns’ its own numbers, analytical trust collapses the moment automation enters the loop. Culture doesn’t just shape output quality; it shapes whether autonomy is even viable.

This is why culture is becoming a competitive advantage.

Two companies can purchase the same AI tooling. The one with alignment wins.

The most important implication of agentic analytics

Agentic analytics is often described as a new analytical paradigm. It’s not. It’s something far more revealing.

It is a diagnostic.

It shows you, sometimes brutally, whether your organisation understands itself well enough to automate its own thinking. It makes misalignment visible. It exposes modelling gaps immediately. It punishes ambiguous definitions and rewards coherence. It tells you, without ceremony, whether your business logic is expressed clearly enough for a system to operate on it without human arbitration.

If your definitions are consistent, your modelling strong and your decision-making rituals stable, AI becomes an accelerant. It increases the frequency and depth of analysis. It allows teams to evaluate dozens of hypotheses per week. It gives leadership a clearer window into the mechanics of their market. And it does so without sacrificing reliability.

If those foundations are weak, AI becomes a mirror.

Not a flattering one.

It reflects the ambiguity that teams have tolerated for years. It surfaces the disagreements that once stayed hidden in notebooks. It shows where definitions differ across functions. And it provokes the question leaders have avoided for too long: Do we actually agree on what we measure, why we measure it and how the business works?

The mirror is unforgiving, but it is also clarifying.

And clarity is the first step towards capability.