The most telling part of both events we attended this month — the Compass Data & AI Summit in Budapest and the Marketing Analytics Summit in Berlin — wasn’t what people said on stage. It was the chats in the corridors between sessions, in the coffee lines and later around dinner tables. Data and AI is facing a reckoning – agentic is promising, but not many orgs have managed to scale it to production yet.

These weren’t complaints about models or latency or cost. They were admissions that a decade of tool-first decision-making had left firms unprepared for the reality of agentic analytics. When automation started interrogating the stack rather than sitting politely on the surface, the gaps became visible. What analysts had previously papered over with tacit knowledge — vague definitions, ambiguous joins, misaligned metrics — suddenly caused systems to fail in public.

This shift matters because it signals something deeper than adoption curves or hype cycles. It shows an industry moving from experimentation to accountability. And it creates a rare moment of clarity: the next wave of analytical capabilities won’t be determined by who adopts the most impressive AI tools. It will be determined by who has the strongest fundamentals, clearest semantics and most aligned cultures.

Against that backdrop, the formal presentations almost seemed like confirmation of what the hallway conversations had already made clear. The industry is entering a period where weak modelling, inconsistent semantics and misaligned culture can no longer be concealed behind polished dashboards or enthusiastic demos. Agentic systems make the weaknesses impossible to ignore. And the professionals who have to operate these systems day to day know it better than anyone.

At Tasman, we see these patterns every week in scale-ups and enterprise teams trying to make sense of their data. The ideas shared in Budapest and Berlin map almost perfectly to what plays out inside real organisations — both in the way they model meaning and the way they make decisions. This post brings those themes together and explains what the European data community is converging on right now.

A new tension: marketing wants AI, but the data still isn’t ready

The most repeated line we heard — and the one that resonated most strongly across both audiences — was simple: your marketing team wants AI, but your data isn’t ready. Their expectations are sensible: diagnose a CAC spike, explain a referral anomaly, detect a creative effect. But agents rely on semantics, not intuition. They cannot infer meaning from ambiguity.

And most stacks — even modern ones — are more ambiguous than teams realise.

A typical case looks like this. A subscription business maintains three subtly different definitions of ‘active user’ across product, growth and finance. Each definition makes sense locally. None makes sense systemically. When the AI assistant is asked to explain rising churn, it generates contradictory queries depending on which metric logic it encounters. The output changes minute to minute. Confidence collapses.

This isn’t an AI problem. It is a semantics problem. And the industry is beginning to admit this openly.

MIT’s 2025 ‘State of AI in Business’ report found that 95 per cent of enterprise GenAI pilots produced no measurable P&L impact. The finding is not surprising once you observe how inconsistent most analytical environments still are.

The problem is not capability. It is meaning.

The consensus: fundamentals first, autonomy second

Across Amsterdam, Budapest and Berlin, four themes appeared independently, yet all pointed to the same conclusion: agentic analytics requires stronger fundamentals than most organisations currently possess.

1. Data Modelling is returning as a first-class discipline

The past decade allowed many teams to get away with weak modelling. Warehouses improved. ELT tools matured. Analysts compensated manually. But machine reasoning does not cope with ambiguity in the same way humans do. Agents repeat the logic they find, not the logic the organisation implicitly agrees on.

Adrian Brudaru’s Compass talk on the LLM Data Engineer (dltHub) captured this shift clearly. Data engineers must now design for two audiences: humans and machines. And machines are far less tolerant of ambiguity.

This mirrors what we see at Tasman. Once domain models stabilise, metric definitions converge, and the semantic layer becomes authoritative, AI agents behave predictably. Until then, autonomy is a liability.

2. Culture determines whether any of this works

Culture was the quiet headline of both events. Amy Raygada of ThoughtWorks emphasised that data mesh is organisational long before it is architectural. At MAS Berlin, Lucy Nemes echoed that sentiment: analytical maturity begins with shared meaning, not with tools.

This aligns with our own field observations. Organisations where marketing, product and finance agree on definitions scale far faster than organisations that permit localised interpretations of success. Culture determines whether semantics can stabilise — and without stable semantics, autonomy collapses.

3. Verification is replacing trust as the design priority

The third shared theme was verification. Teams no longer want agents they ‘trust’. They want agents they can test. This is a profound shift. Verification replaces intuition. Observability replaces optimism. And engineering discipline replaces blind confidence.

Oleksandra Bovkun’s session for Databricks reinforced this from a platform perspective: AI workloads require OLTP-grade guarantees. Agents must operate on stable, observable, consistent structures — or they will not operate at all.

What ‘agentic analytics’ actually means when implemented correctly

The term ‘agentic analytics’ has already absorbed too many meanings, but the systems that work in practice share three traits:

1. They are built on a semantic layer

A semantic layer is not a ‘nice-to-have’. It is the contract that agents rely on to reason. It defines the entities, grains, joins and metric logic that the rest of the stack must honour. Without this contract, agents behave exactly as expected: inconsistently.

2. They use micro-agents, not monoliths

The most reliable agentic systems resemble supply chains, not supercomputers. Each agent performs one action with one definition of done. In your Compass demo, the workflow moved from triage to semantics to SQL generation to hypothesis testing. Breaking the behaviour into explicit steps makes the system testable. This is exactly how you build reliability in any complex environment: small parts, strong contracts, predictable outputs.

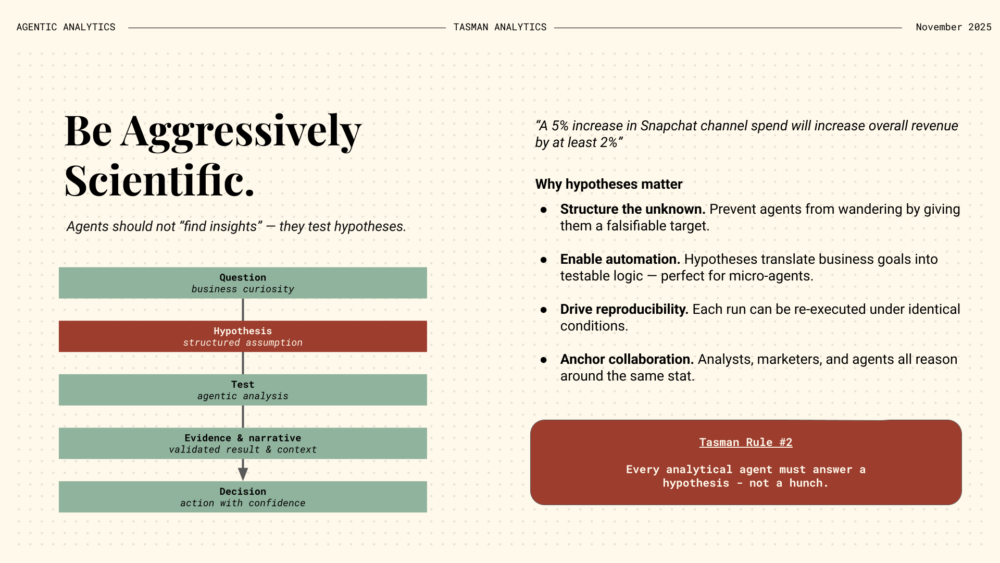

3. They answer hypotheses

The most underrated design technique in agentic analytics is simply requiring every agent to answer a hypothesis. It forces clarity. It prevents open-ended wandering. And it mirrors the way analysts already reason.

Hypothesis → test → evidence → decision.

Marketing teams naturally operate this way, which is why they are often the first to benefit from agentic workflows. When the semantic layer is strong, agents can analyse channel mix, detect anomalies and validate attribution changes with far more frequency and consistency than a human team could manage.

Why modern data stacks struggle — and why AI exposes it so quickly

The widely cited VentureBeat statistic — that 85 per cent of data projects fail — has circulated for years. (VentureBeat) But AI has changed the consequences. In an agentic system, weak modelling becomes compounding failure.

Across our portfolio, the same failure patterns appear again and again:

- stacks with dozens of tools but no enforceable modelling layer

- dashboards that break whenever a schema changes upstream

- event data collected faster than it is governed

- models optimised for dashboards, not decisions

- no alignment on what success should look like

Agentic systems cannot compensate for these problems. They multiply them.

This is why the fundamentals outlined in the ‘Beyond Warehouses’ session — critical paths, domain modelling, semantic layers — were received as essential rather than optional. The problem isn’t that the stack is incomplete. It is that the analytical environment lacks structure.

The community has moved from experimentation to accountability

One reason the mood felt different this year is that senior leaders are beginning to demand measurable impact rather than impressive demos. The MIT research forced a reckoning: autonomy does not guarantee value.

The questions leaders now ask are sharper:

- What measurable improvement did the agent produce?

- Can we reproduce the answer?

- Does the agent use the same definitions as finance?

- What errors are we logging?

- What behaviour can we test automatically?

These are not the questions of an experimental phase. They are the questions of a system expected to operate reliably. The excitement of capability is giving way to the discipline of correctness. And correctness is built, not discovered.