90% of “AI tools for data analytics” are vapourware. According to McKinsey’s 2024 research, 65% of organisations now regularly use AI, yet in our experience most teams still wait more than three days for their analyst to answer ‘did that promotion actually work?’. The gap between conference keynotes and production reality is vast. We know because we work in the messy middle: building data capabilities for ecommerce and retail clients who need answers during peak season, not eventually.

Here’s what we actually use in production client work at Tasman Analytics. Seven tools that solve real bottlenecks: testing attribution models without touching customer data, validating business hypotheses in minutes instead of days, catching tracking bugs before your CMO notices the numbers don’t add up. Every tool here has saved us—and our clients—real time and money.

These aren’t experiments. They’re working implementations that delivered during Black Friday, peak trading periods, and rapid growth phases when getting it wrong is expensive. And now we’re sharing them with you, including prompt examples! We are big fans of Claude at Tasman, so we’re writing for that specific context, but feel free to adapt them to your LLM of choice.

So – let’s get to it 🙂

1. Testing Attribution Models with Synthetic Data Generation

The Problem

You can’t test attribution models on real customer data. It’s bad practice, and often privacy regulations forbid it. Public datasets don’t match your business model—your conversion patterns, basket sizes, and channel mix are unique. Building test environments manually takes weeks of data engineering work. Meanwhile, your CMO wants to see three different attribution approaches before committing budget.

What We Built

We developed a synthetic data generator that creates internally consistent customer journeys. It models the full funnel: marketing spend flows into website visits, which convert at realistic rates with proper attribution patterns. The critical bit is referential integrity—the same customer ID appears across touchpoints, just as in production data.

The technical stack uses Python with Faker for base data generation, DuckDB for local validation, and Apache Spark when clients need scale. We used ChatGPT o1 for initial architecture design, then implemented incrementally using Cursor AI. The generator produces gigabyte-scale datasets in hours.
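To make the referential-integrity idea concrete, here is a minimal sketch of a journey generator. It swaps Python’s standard `random` and `uuid` modules in for Faker so it runs without dependencies; the channel mix, conversion rate, basket-size distribution and field names are illustrative assumptions, not the generator described above.

```python
import random
import uuid
from dataclasses import dataclass
from datetime import datetime, timedelta

# Illustrative assumptions, not a real client's channel mix or conversion rate
CHANNEL_WEIGHTS = {"paid_search": 0.35, "social": 0.25, "email": 0.20, "direct": 0.20}
CONVERSION_RATE = 0.03


@dataclass
class Touchpoint:
    customer_id: str
    channel: str
    timestamp: datetime


@dataclass
class Order:
    customer_id: str
    order_value: float
    timestamp: datetime


def generate_journeys(n_customers, seed=42, start=datetime(2024, 10, 1)):
    """Generate touchpoints and orders that share customer IDs."""
    rng = random.Random(seed)  # seeded so every run produces the same dataset
    channels = list(CHANNEL_WEIGHTS)
    weights = list(CHANNEL_WEIGHTS.values())
    touchpoints, orders = [], []
    for _ in range(n_customers):
        # UUID built from the seeded RNG, so IDs are reproducible too
        customer_id = str(uuid.UUID(int=rng.getrandbits(128)))
        t = start + timedelta(hours=rng.uniform(0, 24 * 30))
        for _ in range(rng.randint(1, 5)):
            channel = rng.choices(channels, weights=weights)[0]
            touchpoints.append(Touchpoint(customer_id, channel, t))
            t += timedelta(hours=rng.uniform(1, 72))
        # An order always reuses the ID of a customer who already has touchpoints,
        # and lands after that customer's last touch
        if rng.random() < CONVERSION_RATE:
            value = round(rng.lognormvariate(4.0, 0.5), 2)  # right-skewed basket sizes
            orders.append(Order(customer_id, value, t))
    return touchpoints, orders


def check_referential_integrity(touchpoints, orders):
    """Every order must belong to a customer seen in the touchpoint data."""
    known = {tp.customer_id for tp in touchpoints}
    return all(order.customer_id in known for order in orders)


touchpoints, orders = generate_journeys(10_000)
print(len(touchpoints), len(orders), check_referential_integrity(touchpoints, orders))
```

In a fuller version, Faker’s providers would supply realistic names and addresses and DuckDB would check aggregates against historical conversion rates; the integrity check itself stays the same.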

One ecommerce client needed to test first-touch versus last-touch versus time-decay attribution before their Black Friday campaign. We generated 500,000 synthetic customer journeys matching their historical conversion rates and channel mix. They tested all three models, chose time-decay, and deployed it two weeks before peak season.
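The three models differ only in how they split the credit for one conversion across its touchpoints. A minimal sketch, assuming each converting journey is an ordered list of (channel, days before conversion) pairs and a seven-day half-life for time decay; both are illustrative choices, not the client’s configuration.

```python
from collections import defaultdict


def first_touch(journey):
    """All credit goes to the first touchpoint."""
    return {journey[0][0]: 1.0}


def last_touch(journey):
    """All credit goes to the final touchpoint before conversion."""
    return {journey[-1][0]: 1.0}


def time_decay(journey, half_life_days=7.0):
    """A touch's weight halves for every `half_life_days` before conversion."""
    weights = [2 ** (-days / half_life_days) for _, days in journey]
    total = sum(weights)
    credit = defaultdict(float)
    for (channel, _), weight in zip(journey, weights):
        credit[channel] += weight / total  # normalised so credit sums to 1
    return dict(credit)


def attribute(journeys, model):
    """Sum fractional conversions per channel across converting journeys."""
    totals = defaultdict(float)
    for journey in journeys:
        for channel, share in model(journey).items():
            totals[channel] += share
    return dict(totals)


# (channel, days before conversion), oldest touch first
journey = [("social", 14), ("email", 7), ("paid_search", 0)]
print(first_touch(journey))  # {'social': 1.0}
print(last_touch(journey))   # {'paid_search': 1.0}
print({c: round(s, 3) for c, s in time_decay(journey).items()})
# {'social': 0.143, 'email': 0.286, 'paid_search': 0.571}
```

Running `attribute` over the same synthetic journeys with each model is what makes a side-by-side comparison cheap; the half-life is the parameter worth sweeping before committing to time decay.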

Client Impact

- Test attribution models before deploying to production data

- Run “what-if” scenarios on pricing strategies without touching real customers

- Demo solutions to stakeholders without data governance headaches

- Spin up testing environments in hours instead of weeks

The Catch

Synthetic data still requires human validation of business logic. Our initial setup draws on expertise we’ve built across dozens of clients—understanding which patterns matter and which don’t. It’s not a replacement for production testing. But it dramatically reduces the risk of deploying an attribution model that makes expensive mistakes at scale.

See our full blog post on using Faker in Python

2. Hypothesis Testing Automation: Validating Business Assumptions in Minutes

The Problem

“Did that promotion actually work?” takes three days to answer. Every hypothesis test requires custom SQL. Your analysts become bottlenecks during peak season planning when merchandising needs rapid answers. There’s no systematic way to track what you’ve already tested, so you retest the same hypotheses across quarters.

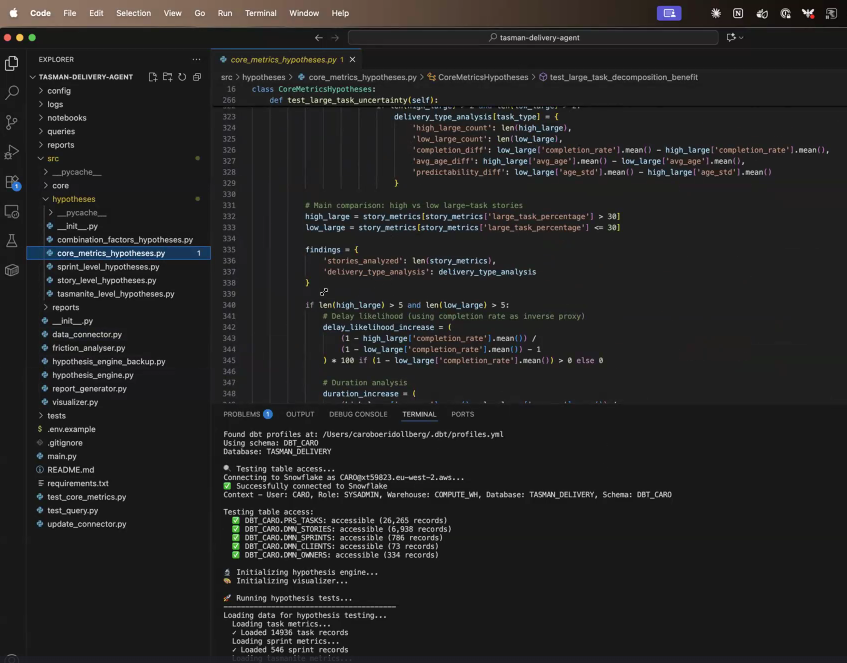

What We Built

We built a hypothesis testing tool that combines Python data processing with LLM integration for validation. The architecture has four distinct layers: a business logic layer for hypothesis definitions, a data processing engine built on pandas and scipy, an LLM validation layer that interprets results, and an HTML dashboard generator for visualisation.

The workflow starts with structured natural language hypotheses. You define them in a format like: “Small tasks are more efficient than story-level tasks” or “Customers who buy product A also buy product B more often than average.” The system translates these into statistical tests—t-tests for continuous variables, chi-square tests for categorical relationships, correlation analysis where appropriate.

The data processing engine runs the analysis using scipy.stats for statistical validation and pandas for data manipulation. It calculates p-values, confidence intervals, and effect sizes. The LLM layer then interprets these results in business context, determining whether the hypothesis is confirmed (p < 0.05 and meaningful effect size), denied (p > 0.05 or negligible effect), or partially supported (significant in some segments but not others).
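The statistical core of a product-affinity test can be sketched with the standard library alone. For a 2×2 table the chi-square statistic has one degree of freedom, so its p-value is `erfc(sqrt(chi2 / 2))`. This is a minimal sketch: unlike `scipy.stats.chi2_contingency`, which applies the Yates continuity correction to 2×2 tables by default, it computes the uncorrected Pearson statistic, and the `min_lift` threshold standing in for “meaningful effect size” is our assumption.

```python
import math


def chi_square_2x2(a_and_b, a_only, b_only, neither):
    """Pearson chi-square test of association on a 2x2 table of session counts.

    Rows: sessions with / without product A; columns: with / without product B.
    """
    table = [[a_and_b, a_only], [b_only, neither]]
    n = a_and_b + a_only + b_only + neither
    row_totals = [sum(row) for row in table]
    col_totals = [a_and_b + b_only, a_only + neither]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (table[i][j] - expected) ** 2 / expected
    p_value = math.erfc(math.sqrt(chi2 / 2))  # chi-square survival function, df = 1
    # Lift: observed rate of B among A-sessions / overall rate of B
    lift = (a_and_b / row_totals[0]) / (col_totals[0] / n)
    return chi2, p_value, lift


def verdict(p_value, lift, alpha=0.05, min_lift=1.1):
    """Confirmed only if significant AND the effect is big enough (min_lift is illustrative)."""
    if p_value < alpha and lift >= min_lift:
        return "Confirmed"
    return "Denied"


def overall_verdict(segment_verdicts):
    """Partial when the effect holds in some segments but not others."""
    if all(v == "Confirmed" for v in segment_verdicts):
        return "Confirmed"
    if any(v == "Confirmed" for v in segment_verdicts):
        return "Partial"
    return "Denied"


# Illustrative counts: 60 of 100 sandal sessions include sunglasses, 160 of 1,000 overall
chi2, p, lift = chi_square_2x2(a_and_b=60, a_only=40, b_only=100, neither=800)
print(round(lift, 2), verdict(p, lift))  # 3.75 Confirmed
```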

The HTML dashboard generation uses matplotlib and seaborn for visualisations, wrapped in Jinja2 templates for consistent formatting. Each dashboard includes the statistical verdict, supporting evidence with specific numbers, breakdown by relevant segments, and counterexamples when hypotheses are denied.

Prompt Example

Analyse this dataset to validate the hypothesis: ‘Customers who buy sandals are more likely to buy sunglasses in the same session’

Dataset context:

– transactions table with customer_id, session_id, product_category, price, timestamp

– 2 years of transaction data, ~500K sessions

– Product categories include: sandals, sunglasses, beachwear, accessories

Required analysis:

1. Statistical validation using chi-square test for categorical association

2. Calculate lift (observed rate / expected rate) with 95% confidence intervals

3. Segment breakdown by price bands: <£50, £50-£100, >£100

4. Time-based analysis: does this pattern hold across seasons?

5. Identify counterexamples: sessions with sandals but no sunglasses

Output format:

– Clear verdict (Confirmed/Denied/Partial) with p-value

– Lift metric with confidence interval

– Segment-specific results in table format

– Visual breakdown recommendations (suggested chart types)

– Business implications: where should this insight apply?

Technical Implementation Notes

The system uses a configuration file to define hypothesis templates. For product affinity tests, the template specifies: baseline metric calculation (overall purchase rate), test group definition (sessions with product A), comparison logic (purchase rate of product B in test vs baseline), and segmentation dimensions (price, season, customer type).

For statistical tests, we use scipy.stats with a Bonferroni correction when testing multiple segments, to control the family-wise error rate (the probability of at least one false positive across all segment tests). The LLM layer receives structured JSON with test results and generates human-readable interpretations that non-technical stakeholders can act on immediately.
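A minimal sketch of the segment-level correction and the structured payload handed to the LLM layer. The JSON field names are a hypothetical schema, not our production format; the fixed rule is only that with m segment tests, each p-value is multiplied by m (capped at 1) before comparing against alpha.

```python
import json


def bonferroni(p_values, alpha=0.05):
    """Bonferroni: multiply each p-value by the number of tests (capped at 1)."""
    m = len(p_values)
    adjusted = [min(p * m, 1.0) for p in p_values]
    return adjusted, [p_adj < alpha for p_adj in adjusted]


def build_llm_payload(hypothesis, segment_results, alpha=0.05):
    """Package segment results as JSON for the interpretation layer (hypothetical schema)."""
    adjusted, significant = bonferroni([r["p_value"] for r in segment_results], alpha)
    segments = [
        {**result, "p_adjusted": round(p_adj, 4), "significant": sig}
        for result, p_adj, sig in zip(segment_results, adjusted, significant)
    ]
    payload = {"hypothesis": hypothesis, "alpha": alpha,
               "correction": "bonferroni", "segments": segments}
    return json.dumps(payload, indent=2, ensure_ascii=False)


# Illustrative numbers only, one entry per price band
results = [
    {"segment": "<£50", "lift": 1.42, "p_value": 0.004},
    {"segment": "£50-£100", "lift": 1.18, "p_value": 0.030},
    {"segment": ">£100", "lift": 1.02, "p_value": 0.610},
]
print(build_llm_payload("Sandal buyers also buy sunglasses in the same session", results))
```

The £50-£100 band (p = 0.03) would pass an uncorrected 0.05 threshold but fails the corrected one, which is exactly the kind of false positive the correction suppresses. If you would rather control the false discovery rate, Benjamini-Hochberg is the usual alternative.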

Client Impact

- Test ten attribution model hypotheses in the time it used to take to test one

- Merchandising teams can self-serve basic analysis without blocking analysts

- Faster decision-making during peak season when speed matters most

- Clear documentation of what works and what doesn’t across campaigns

The Catch

Humans still frame the right questions. AI can’t tell you which hypotheses matter for your business. Results need business context to be actionable—knowing that a correlation exists doesn’t tell you whether to act on it. The system flags statistical significance, but you need domain expertise to distinguish between interesting findings and actionable insights. Not every statistically significant result justifies changing your merchandising strategy.

3. AI-Assisted Development: Automated Test Generation for Faster Shipping

The Problem

Manual QA is slow and incomplete. Small teams can’t afford dedicated QA resources. Testing across browsers and devices is time-consuming. Edge cases get missed until they hit production and break something visible.

What We Use

We use Cursor’s composer feature with the Claude API for test generation, integrated with Selenium or Playwright for browser automation and pytest for test structure. The workflow starts with describing features in natural language, then generating comprehensive test scenarios, including edge cases humans typically forget.

The technical stack is straightforward: Cursor IDE provides the AI pair programming interface, Claude generates the test logic and structure, pytest provides the testing framework, and Selenium/Playwright handle browser automation for frontend tests. For API testing, we use pytest with the requests library. The key advantage is that Cursor automatically generates properly structured test files with setup, teardown, and fixtures already configured.

The system excels at covering edge cases systematically. When you describe a user registration form, it doesn’t just test the happy path—it generates tests for email format validation, password strength requirements, duplicate user handling, SQL injection attempts, XSS vulnerabilities, and boundary conditions on text fields. These are the cases that slip through manual QA because humans get tired or forget to check them consistently.

Specific Implementation Example

For one ecommerce client, we built registration form tests that needed to validate across multiple browsers and handle iframe-based payment integration. The manual QA process took approximately 4 hours per deployment cycle—testing Chrome, Firefox, Safari, and Edge, plus mobile browsers.

We described the feature to Claude Code:

Generate pytest test cases for a user registration form with:

– Email validation (format, domain checking, disposable email blocking)

– Password requirements (min 12 chars, uppercase, lowercase, number, special char)

– Duplicate user prevention (email and username uniqueness)

– Security: SQL injection, XSS attempts, CSRF token validation

– Iframe payment integration via Stripe

– Form submission with network failures

– Cross-browser compatibility (Chrome, Firefox, Safari, Edge)

– Mobile responsive validation

Include proper test structure with setup, teardown, and fixtures.

Claude then generated 47 test cases organised into logical groups, and the suite ran in 23 minutes across all browsers using pytest-xdist for parallel execution. QA time per deployment cycle dropped from 4 hours to approximately 45 minutes (23 minutes of runtime plus 22 minutes of human review of results). That’s an 81 per cent reduction, which is pretty significant!

Edge Cases Humans Miss

The most valuable aspect is systematic edge case coverage. Claude generated tests for scenarios our manual QA hadn’t consistently checked:

- Email addresses with plus-addressing (user+tag@example.com), which often break email uniqueness validation

- Maximum length inputs (what happens with a 500-character password?)

- Rapid form resubmission (double-click on submit button)

- Network timeouts during iframe payment loading

- Browser back button after partial form completion

- Copy-paste into password fields (some systems incorrectly strip whitespace)

- Autocomplete interactions with password managers.
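To show what a few of these edge cases look like as tests, here is a pytest-style sketch against a hypothetical in-memory `register_user`. The rules it assumes (12 to 128 character passwords, case-insensitive email uniqueness, plus-tagged addresses treated as distinct) are illustrative; whether `user+tag@example.com` should count as a duplicate of `user@example.com` is a business decision, not a technical one.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
MIN_PASSWORD, MAX_PASSWORD = 12, 128  # assumed policy limits


class RegistrationError(ValueError):
    pass


class UserStore:
    """Hypothetical in-memory stand-in for the real user table."""

    def __init__(self):
        self.emails = set()

    def register_user(self, email, password):
        if not EMAIL_RE.match(email):
            raise RegistrationError("invalid email")
        if not MIN_PASSWORD <= len(password) <= MAX_PASSWORD:
            raise RegistrationError("password length out of range")
        key = email.lower()  # uniqueness check ignores case
        if key in self.emails:
            raise RegistrationError("duplicate email")
        self.emails.add(key)
        return key


def error_from(fn, *args):
    """Return the RegistrationError message, or None if no error was raised."""
    try:
        fn(*args)
    except RegistrationError as exc:
        return str(exc)
    return None


def test_plus_addressing_is_a_distinct_address():
    store = UserStore()
    store.register_user("user@example.com", "x" * 12)
    assert store.register_user("user+tag@example.com", "x" * 12) == "user+tag@example.com"


def test_uniqueness_ignores_uppercase():
    store = UserStore()
    store.register_user("User@Example.com", "x" * 12)
    assert error_from(store.register_user, "user@example.com", "y" * 12) == "duplicate email"


def test_500_character_password_is_rejected_cleanly():
    store = UserStore()
    assert error_from(store.register_user, "a@example.com", "p" * 500) == "password length out of range"


def test_double_submit_creates_one_user():
    store = UserStore()
    store.register_user("a@example.com", "x" * 12)
    assert error_from(store.register_user, "a@example.com", "x" * 12) == "duplicate email"
    assert len(store.emails) == 1
```

pytest collects the `test_` functions as written; the small `error_from` helper replaces `pytest.raises` only to keep the sketch dependency-free.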

Client Impact

- 50 to 85 per cent reduction in test creation time

- Higher test coverage with less manual effort—went from 23 manually tested scenarios to 47 automated tests. Significant increase in analytics team efficiency.

- Fewer bugs reaching production: caught a bug with uppercase email addresses and three XSS vulnerabilities before deployment

- Faster time-to-market for new features during competitive periods—QA no longer blocks Friday deployments

The Catch

Generated tests still need human review! AI doesn’t understand business-critical versus nice-to-have features—it treats all validation equally. You still need to prioritise which tests block deployment versus which are informational.

Integration testing with real systems remains essential. These generated tests validate isolated behaviour, but you need end-to-end tests with production-like data and real payment processing. We’ve found that AI-generated tests are excellent for catching technical bugs (validation logic, security issues, browser compatibility) but miss business logic errors (wrong discount calculation, incorrect inventory reservations).

The 50-85 per cent time reduction is specifically for test creation and execution. You still need roughly the same amount of time for reviewing test results, investigating failures, and validating that tests actually cover the requirements. The speedup comes from eliminating the tedious work of writing repetitive test code, not from eliminating human judgment.

4. Marketing Attribution Testing: Automated Tracking Validation

The Problem

Attribution only works if tracking works. Manual tracking audits are tedious and incomplete. Gaps get discovered when reports look wrong three weeks after launch. There’s no systematic way to validate tracking before going live, so you’re constantly firefighting data quality issues.

What We Do

AI reviews tracking documentation for gaps and cross-references specs against implementation. It identifies inconsistencies and missing coverage before client review. For one luxury retail client launching a new mobile app, we ran their tracking spec through validation. It flagged that checkout completion events were firing on button click rather than transaction confirmation—a gap that would have inflated conversion rates by roughly 15 per cent.
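Much of that review sits on a mechanical spec-versus-implementation diff that is easy to script, leaving the LLM and the analyst free for the judgement calls. A minimal sketch, assuming both the spec and the deployed tag configuration can be exported as mappings from event name to trigger and parameters; the event names, triggers and parameters are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    event: str
    issue: str


def validate_tracking(spec, implemented):
    """Compare a tracking spec with what is actually deployed.

    Both arguments map event name -> {"trigger": str, "params": set of str}.
    """
    findings = []
    for event, expected in spec.items():
        actual = implemented.get(event)
        if actual is None:
            findings.append(Finding(event, "missing: in spec but not implemented"))
            continue
        if actual["trigger"] != expected["trigger"]:
            findings.append(Finding(
                event,
                f"trigger mismatch: spec '{expected['trigger']}', fires on '{actual['trigger']}'"))
        missing_params = expected["params"] - actual["params"]
        if missing_params:
            findings.append(Finding(event, f"missing params: {sorted(missing_params)}"))
    for event in implemented.keys() - spec.keys():
        findings.append(Finding(event, "undocumented: implemented but not in spec"))
    return findings


spec = {
    "checkout_complete": {"trigger": "order_confirmation", "params": {"order_id", "revenue"}},
    "add_to_cart": {"trigger": "add_button_click", "params": {"sku", "price"}},
}
implemented = {
    "checkout_complete": {"trigger": "pay_button_click", "params": {"order_id", "revenue"}},
    "add_to_cart": {"trigger": "add_button_click", "params": {"sku"}},
}
for finding in validate_tracking(spec, implemented):
    print(finding.event, "->", finding.issue)
```

A rule like this catches structural gaps; deciding whether a click is an acceptable proxy for a confirmed transaction is where the AI review and a human add value.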

Client Impact

- Catch attribution problems and tracking gaps before they affect reports

- Higher confidence in attribution accuracy during campaign planning

- Fewer “why don’t these numbers match?” conversations with stakeholders

- Reduced back-and-forth delays during implementation reviews

The Catch

AI flags potential issues. Humans confirm they matter. You still need to actually fix the problems it finds. This isn’t a replacement for systematic tracking frameworks—it’s quality assurance on top of good tracking practices.

5. Sprint Review Automation for Distributed Teams (or: How To Skip Meetings Responsibly)

The Problem

Sprint reviews go ahead even when someone can’t attend. Critical decisions get lost in meeting notes that no one reads. Knowledge silos form when people miss key discussions. Async teams struggle to stay aligned without adding more synchronous time.

What We Generate

We record sprint reviews with transcripts enabled (Zoom or Teams both work), export as .vtt or .txt, and process through Claude within 24 hours while context is still fresh. We use consistent prompt templates across sprints, which keeps the summaries organised and easier to search later.

For one client with team members across London, Amsterdam, and New York, we implemented this for their biweekly sprint reviews. The summaries became their source of truth for what was decided and why. Team members who missed meetings could catch up in five minutes rather than watching hour-long recordings. The searchable format meant anyone could find “why did we choose approach X?” months later.

How We Prompt It with Claude:

Analyse this sprint review transcript and create a structured summary with:

1. Key Decisions Made

2. Action Items (with owners)

3. Blockers Identified

4. Technical Discussions (brief summary)

5. Next Sprint Priorities

Format as markdown with clear sections. Focus on actionable information.
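Before a .vtt export reaches Claude, it helps to strip the WebVTT scaffolding (header, numeric cue identifiers, timings) so the token budget goes on what people actually said. A minimal sketch of that pre-processing; `vtt_to_text` and `build_prompt` are hypothetical helpers, the API call itself is left out, and a spoken line consisting only of digits would be dropped along with the cue numbers.

```python
import re

CUE_TIMING = re.compile(r"^(\d{2,}:)?\d{2}:\d{2}\.\d{3}\s+-->")
VOICE_TAG = re.compile(r"<v\s+([^>]+)>")  # WebVTT voice span, e.g. <v Sarah>

PROMPT_TEMPLATE = """Analyse this sprint review transcript and create a structured summary with:
1. Key Decisions Made
2. Action Items (with owners)
3. Blockers Identified
4. Technical Discussions (brief summary)
5. Next Sprint Priorities
Format as markdown with clear sections. Focus on actionable information.

Transcript:
{transcript}"""


def vtt_to_text(vtt):
    """Keep only the spoken lines of a WebVTT transcript."""
    lines = []
    for raw in vtt.splitlines():
        line = raw.strip()
        # Skip the header, blank lines, numeric cue identifiers and cue timings
        if not line or line.startswith("WEBVTT") or line.isdigit() or CUE_TIMING.match(line):
            continue
        # Turn "<v Name>text</v>" into "Name: text"
        line = VOICE_TAG.sub(r"\1: ", line).replace("</v>", "")
        lines.append(line)
    return "\n".join(lines)


def build_prompt(vtt):
    """Wrap the cleaned transcript in the summary prompt."""
    return PROMPT_TEMPLATE.format(transcript=vtt_to_text(vtt))
```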

Client Impact

- No time spent writing meeting notes by hand

- Better knowledge retention across sprints

- Async team members stay informed without scheduling conflicts

- Clear audit trail when someone asks “why did we decide that?”

The Catch

This works best with recorded meetings. AI misses non-verbal context—tone, hesitation, body language. Summaries need human review for accuracy, particularly when decisions involve nuance. Process transcripts within 24 hours while the context is fresh in reviewers’ minds.

6. Data Pipeline Optimisation with AI Code Generation

The Problem

LLMs jump straight to code without planning. Generated code often works initially but collapses under production load. There’s no documentation by default. Tests are absent. When something breaks at 2am during peak season, no one can debug it quickly.

What We Enforce

We use Claude Code for our AI code generation with a structured methodology that forces planning before coding. The workflow has five distinct phases: architecture design, implementation plan, incremental feature building with small testable chunks, test generation after each feature, and code review where AI validates its own work.

The critical insight is breaking work into small features rather than attempting “build the whole app.” We commit to GitHub after each validated feature. This creates natural checkpoints and makes problems easier to isolate.

Test-Driven Development with AI Self-Validation

We’ve extended this approach with test-driven development where AI writes tests before implementation. This creates a self-validating loop: write tests that define expected behaviour, implement code to pass those tests, run tests to verify, then iterate. The AI can validate its own work against the test suite and self-correct when tests fail.

The workflow looks like this:

Step 1: Define expected behaviour in tests

“Write pytest tests for an inventory sync function that:

– Accepts WMS inventory data as JSON

– Validates required fields (SKU, quantity, warehouse_id)

– Handles rate limits with exponential backoff

– Returns success/failure status with error details”

Step 2: Implement to pass tests

“Implement the inventory sync function to pass these tests.

Include proper error handling and logging.”

Step 3: AI runs tests and self-corrects

“Run pytest. If tests fail, analyse the failure and fix the implementation.

Repeat until all tests pass.”

This creates a validation loop where the AI can catch its own mistakes. For a WMS-to-Shopify inventory sync project, we generated tests for rate limit handling before implementing the backoff logic. When the initial implementation failed edge cases (simultaneous requests hitting rate limits), the AI identified the failure, diagnosed that the backoff calculation was incorrect, and corrected it, all without human intervention.
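For orientation, here is roughly the shape of function that loop converges on: required-field validation plus retries with exponential backoff. It is a minimal sketch rather than the project code. `RateLimitError`, the injected `push` callable and the retry parameters are hypothetical stand-ins for the real WMS and Shopify clients, and the random jitter and injectable `sleep` are our own choices (the latter so tests run instantly).

```python
import json
import logging
import random
import time

logger = logging.getLogger("inventory_sync")

REQUIRED_FIELDS = ("sku", "quantity", "warehouse_id")


class RateLimitError(Exception):
    """Hypothetical error the downstream API client raises on HTTP 429."""


def validate_record(record):
    """Return a list of problems with one WMS inventory record (empty if valid)."""
    errors = [f"missing {field}" for field in REQUIRED_FIELDS if field not in record]
    qty = record.get("quantity")
    if qty is not None and (isinstance(qty, bool) or not isinstance(qty, int) or qty < 0):
        errors.append("quantity must be a non-negative integer")
    return errors


def sync_inventory(payload, push, max_retries=5, base_delay=0.5, sleep=time.sleep):
    """Validate WMS JSON and push each record, backing off exponentially on rate limits."""
    results = {"synced": [], "failed": []}
    for record in json.loads(payload):
        errors = validate_record(record)
        if errors:
            results["failed"].append({"record": record, "errors": errors})
            continue
        for attempt in range(max_retries + 1):
            try:
                push(record)
                results["synced"].append(record["sku"])
                break
            except RateLimitError:
                if attempt == max_retries:
                    results["failed"].append({"record": record, "errors": ["rate limited"]})
                    break
                # Delay doubles per attempt; random jitter stops simultaneous
                # requests from retrying in lockstep
                delay = base_delay * 2 ** attempt * random.uniform(0.5, 1.0)
                logger.warning("Rate limited on %s; retrying in %.2fs", record["sku"], delay)
                sleep(delay)
    results["success"] = not results["failed"]
    return results
```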

The self-validation capability is particularly valuable for data pipelines where edge cases are common but hard to predict. One data transformation pipeline had 23 different edge cases for handling malformed input (missing fields, incorrect types, out-of-range values). The AI wrote tests for each case, implemented handlers, and iteratively fixed the 7 cases that initially failed.

The Test Bloat Problem

However, TDD with AI creates a practical problem: overwhelming numbers of often redundant tests. Left unconstrained, AI generates exhaustive test suites that slow down development and CI/CD pipelines without adding proportional value.

For one sample project, the initial AI-generated test suite had 187 test cases for a data ingestion pipeline. Manual review revealed that 89 of these tests were effectively redundant—testing the same underlying logic with slightly different inputs. The tests took 14 minutes to run in CI, which blocked rapid iteration during development.

We’ve learned to constrain test generation explicitly:

Write pytest tests for [function], focusing on:

1. Core happy path (1-2 tests maximum)

2. Critical edge cases only (not exhaustive)

3. Error conditions that would cause production failures

4. Integration points with external systems

Avoid:

– Testing library functionality (don’t test that pytest works)

– Redundant parameterized tests (group similar cases)

– Testing obvious behaviour (don’t test that 2+2=4)

– Excessive mocking (prefer real integration where safe)

Aim for 5-10 tests total. Quality over quantity.

This reduced the test suite for that ingestion pipeline from 187 tests to 31 focused tests. Runtime dropped from 14 minutes to 3 minutes. More importantly, the tests were actually useful—each test caught a distinct failure mode rather than validating the same logic repeatedly.

The guideline we follow: each test should catch a failure that the other tests wouldn’t catch. If removing a test doesn’t reduce coverage of distinct failure modes, the test is redundant.
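That rule can be applied mechanically once you know which failure modes each test catches, for example from a mutation-testing run where each mutant is a deliberately injected bug. A minimal sketch using greedy set cover; the test names and mutant IDs are hypothetical, and greedy selection yields a small covering suite, not a guaranteed minimum.

```python
def prune_tests(kills):
    """Greedily keep tests until every detectable failure mode is covered.

    `kills` maps test name -> set of failure modes (e.g. mutant IDs) it catches.
    """
    uncovered = set().union(*kills.values())
    keep = []
    while uncovered:
        # Pick the test that catches the most still-uncovered failure modes
        best = max(kills, key=lambda t: len(kills[t] & uncovered))
        keep.append(best)
        uncovered -= kills[best]
    redundant = sorted(set(kills) - set(keep))
    return keep, redundant


kills = {
    "test_valid_record": {"m1", "m2"},
    "test_valid_record_other_sku": {"m1", "m2"},  # same logic, different input
    "test_missing_sku": {"m3"},
    "test_negative_quantity": {"m4"},
    "test_missing_sku_and_quantity": {"m3"},
}
keep, redundant = prune_tests(kills)
print(keep)       # ['test_valid_record', 'test_missing_sku', 'test_negative_quantity']
print(redundant)  # ['test_missing_sku_and_quantity', 'test_valid_record_other_sku']
```

Flagged tests are candidates for review, not automatic deletion: a test can be worth keeping purely as readable documentation of intent.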

Client Impact

- Higher quality code from the start—fewer production bugs from missing edge cases

- Easier maintenance and handover to internal teams—tests document expected behaviour

- Fewer production incidents during peak season—edge cases caught before deployment

- Better documentation by default when team members change—test suite serves as specification

- Self-correction reduces iteration time—AI fixes its own mistakes without waiting for human review

The Catch

Slower initial setup than “just code it”. Requires discipline to follow the process rather than rushing to implementation. You still need architectural expertise to validate plans—AI can structure the planning, but it can’t replace experience knowing which approaches work at scale.

The TDD approach with AI self-validation is powerful but requires active management of test bloat. You need human judgment to distinguish between thorough testing and redundant testing. We’ve found that roughly 40-60 per cent of AI-generated tests can be removed without reducing meaningful coverage. The time saved in not running redundant tests outweighs the time spent reviewing and pruning the test suite.

Most importantly: tests are documentation for future maintainers. Overwhelming test suites make the codebase harder to understand, not easier. Aim for tests that communicate intent clearly, not tests that exhaustively validate every possible input combination.

7. Query Cost Optimisation: Data Warehouse Cost Reduction with AI

The Problem

Data warehouse costs scale faster than you expect. Peak season puts unexpected load on systems. You have no visibility into what’s driving costs until the bill arrives. Optimisation requires specialised expertise that’s expensive to hire.

What We Monitor

We analyse query performance patterns using the same Claude-based approach from section 2, but focused specifically on cost reduction. By reviewing EXPLAIN plans from Snowflake or BigQuery, we identify expensive operations and recommend alternatives. We track cost per query type and look for warehouse-specific optimisation opportunities.

One retail client’s Snowflake costs jumped 140 per cent month-over-month in October as they prepared for Black Friday. We analysed their query patterns and found that historical reporting queries were running on their largest warehouse size. Moving those queries to a smaller warehouse during off-peak hours reduced their monthly bill by £8,000 with zero impact on performance.
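Snowflake bills warehouses in credits, and for standard warehouses the hourly credit rate doubles with each size step (X-Small at 1 credit per hour up to 4X-Large at 128), so a first-pass estimate for a resize is simple arithmetic. A minimal sketch; the credit price, hours and runtime factor are illustrative assumptions rather than this client’s figures (credit prices vary by edition and region), and it ignores per-second billing details and auto-suspend.

```python
# Credits per hour for Snowflake standard warehouses; each size step doubles the rate
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16, "2XL": 32, "3XL": 64, "4XL": 128}


def monthly_cost(size, hours_per_day, price_per_credit, days=30):
    """Cost of keeping a warehouse of `size` running for the given hours."""
    return CREDITS_PER_HOUR[size] * hours_per_day * days * price_per_credit


def resize_saving(current, target, hours_per_day, price_per_credit, runtime_factor=1.0, days=30):
    """Monthly saving from moving a workload to another warehouse size.

    runtime_factor is an assumption to measure, not guess: how much longer the
    same workload runs on the target size (it need not scale with the size step).
    """
    before = monthly_cost(current, hours_per_day, price_per_credit, days)
    after = monthly_cost(target, hours_per_day * runtime_factor, price_per_credit, days)
    return before - after


# Illustrative: reporting runs 6 h/day on XL at an assumed £2.50 per credit,
# and takes 1.5x as long on a Medium warehouse
print(monthly_cost("XL", 6, 2.50))                            # 7200.0
print(resize_saving("XL", "M", 6, 2.50, runtime_factor=1.5))  # 4500.0
```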

Client Impact

- Proactive data warehouse cost reduction rather than reactive cost cutting

- Better capacity planning for peak season loads

- Reduced surprise bills when usage spikes

- Clear ROI on optimisation efforts

The Catch

You need baseline metrics first. Not all optimisations are worth the developer time—some queries are cheap enough that optimising them costs more than leaving them alone. Balance cost reduction against engineering capacity.

Conclusion: What Actually Matters

AI is a multiplier, not magic. Every AI tool for data analytics requires human expertise to deploy effectively. Expect roughly 50 per cent of AI-generated output to be directly usable—the other half needs significant review and refinement. The value comes from faster time-to-insight, not from replacing people.

The hard part isn’t the AI. It’s knowing which problems to solve. When should you optimise query performance versus accept the cost? Which attribution model matters for your business model? What tracking gaps will actually affect decisions? As Harvard Business Review notes in their research on data-driven decision making, interpreting data correctly matters more than having the data itself.

These seven tools solve real bottlenecks: attribution confidence, warehouse costs, decision speed, code quality. They’re most valuable during peak season and rapid growth phases when time pressure is highest. The ROI comes from faster decisions and fewer costly mistakes, not from headcount reduction.

For your business: Which of these resonates with your current pain points? Where are you losing time, money, or confidence today? What would 50 per cent faster analytics delivery be worth during your peak season? That’s the conversation worth having now – and your choices for production AI tools will be downstream from that. Have fun!