Every summer summit at Tasman includes a hackathon—a chance to step out of our day-to-day work, learn something new, and collaborate with colleagues we don’t normally work with. This year, we succumbed to the draw of AI and settled on creating an app that could take natural language queries, convert them to SQL, extract data, and create visualisations. Essentially, we tried to automate what we do for clients every day.

The slightly uncomfortable question lurking beneath? How soon could we be replaced by AI?

But what started as a hackathon experiment has evolved into a broader exploration of how AI agents are fundamentally changing the way we build and deliver analytics capabilities. Here’s what we learnt—and where the industry is heading.

The Original Vision: Analytics at the Speed of Thought

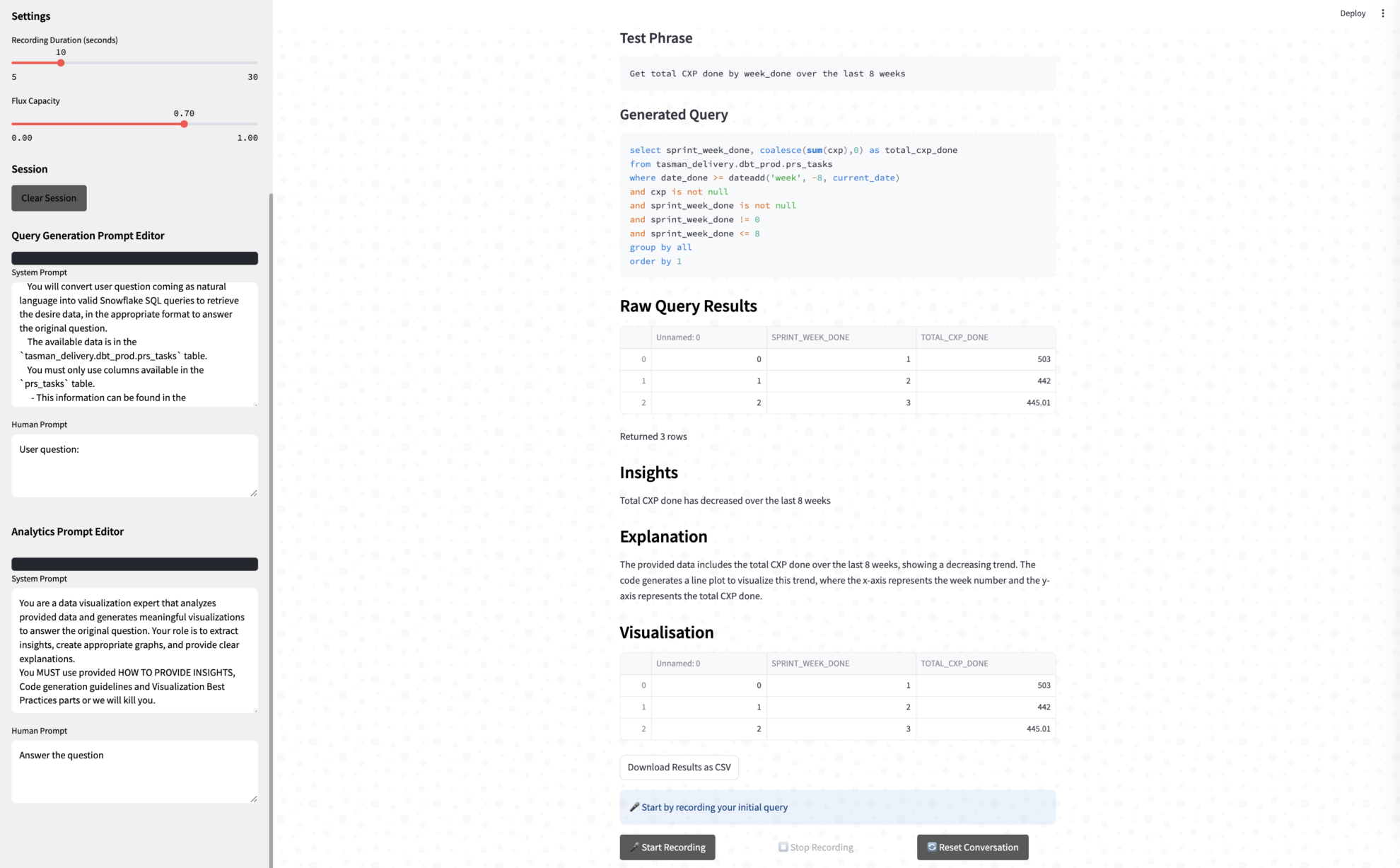

Our hackathon goal was ambitious: build an AI-driven app that goes from voice input → natural language → SQL → data extraction → visual insights. For added complexity, we wanted voice control to drive the entire experience.

The crucial constraint? We wanted everyone involved—from our Head of Operations to Marketing to the Executive team. This meant the project had to be accessible to people with varying technical backgrounds. At its best, this is exactly what AI promises: breaking down technical barriers so anyone can contribute meaningful work.

The Team Structure

We divided the company into five teams, each owning a critical component:

- Voice Input – Building a UI and implementing speech-to-text using OpenAI’s Whisper

- Input Validation – Creating guardrails against dangerous SQL (DROP statements), inappropriate questions, and irrelevant queries

- Hypothesis to SQL – Converting natural language to SQL using project context, dbt documentation, and metadata

- Query Evaluation – Assessing SQL quality, validity, and accuracy—with feedback loops to improve poor queries

- Visualisation & Insights – Automatically selecting appropriate charts and generating analytical commentary
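To make the Input Validation idea concrete, a guardrail can start as a plain keyword check that runs before any model is involved. The sketch below is illustrative rather than the hackathon code: `validate_sql`, `strip_comments_and_strings`, and the `FORBIDDEN_KEYWORDS` list are hypothetical names, and the check is only a heuristic. A read-only database role remains the stronger safeguard.

```python
import re

# Statement types rejected outright (an illustrative list, not an exhaustive one).
FORBIDDEN_KEYWORDS = ("DROP", "DELETE", "TRUNCATE", "ALTER", "INSERT", "UPDATE", "GRANT")


def strip_comments_and_strings(sql: str) -> str:
    """Blank out comments and string literals so keywords inside them are ignored.

    Heuristic only: unusual quoting (for example "--" inside a literal) can fool it.
    """
    sql = re.sub(r"--[^\n]*", " ", sql)                # line comments
    sql = re.sub(r"/\*.*?\*/", " ", sql, flags=re.S)   # block comments
    return re.sub(r"'(?:[^']|'')*'", "''", sql)        # single-quoted literals


def validate_sql(sql: str) -> tuple[bool, str]:
    """Return (is_safe, reason). Only a single SELECT or WITH statement passes."""
    cleaned = strip_comments_and_strings(sql).strip().rstrip(";").strip()
    if not cleaned:
        return False, "empty query"
    if ";" in cleaned:
        return False, "multiple statements are not allowed"
    if not re.match(r"(?i)^(select|with)\b", cleaned):
        return False, "only SELECT queries are allowed"
    for keyword in FORBIDDEN_KEYWORDS:
        if re.search(rf"(?i)\b{keyword}\b", cleaned):
            return False, f"forbidden keyword: {keyword}"
    return True, "ok"


safe = validate_sql("SELECT week, SUM(points) FROM deliveries GROUP BY week;")
blocked = validate_sql("DROP TABLE deliveries")
```

Checks for inappropriate or irrelevant questions are harder to express as rules and would more likely need a separate LLM call.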

Each team worked in dedicated Python notebooks (familiar technology for most of the company) that imported shared utilities. The magic? A shared state object that enforced data contracts between modules. This meant teams could innovate independently whilst maintaining clean interfaces.
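As a rough illustration of the pattern (not our actual implementation), a shared state object with enforced contracts can be as small as a dataclass plus a wrapper that checks each module's inputs and outputs. The names `PipelineState` and `run_module`, and the field names, are assumptions made for this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class PipelineState:
    """Hypothetical shared state handed from one module to the next."""
    question: Optional[str] = None      # written by Voice Input
    is_valid: Optional[bool] = None     # written by Input Validation
    sql: Optional[str] = None           # written by Hypothesis to SQL
    rows: Optional[list] = None         # written by Query Evaluation
    chart_spec: Optional[dict] = None   # written by Visualisation & Insights
    commentary: Optional[str] = None    # written by Visualisation & Insights
    prompts: dict = field(default_factory=dict)  # prompt used by each module, kept for debugging


def run_module(state: PipelineState, name: str, requires: List[str],
               provides: List[str], fn: Callable[[PipelineState], None]) -> PipelineState:
    """Run one module and enforce its input/output contract."""
    missing = [f for f in requires if getattr(state, f) is None]
    if missing:
        raise ValueError(f"{name}: missing inputs {missing}")
    fn(state)
    unfilled = [f for f in provides if getattr(state, f) is None]
    if unfilled:
        raise ValueError(f"{name}: did not produce {unfilled}")
    return state


# Mock the upstream fields to test one module in isolation.
state = PipelineState(question="How many points did we deliver weekly?", is_valid=True)


def fake_sql_module(s: PipelineState) -> None:
    s.prompts["hypothesis_to_sql"] = f"Translate to SQL: {s.question}"
    s.sql = "SELECT 1"


run_module(state, "hypothesis_to_sql", ["question", "is_valid"], ["sql"], fake_sql_module)
```

Because every module reads from and writes to the same object, a team can populate the upstream fields by hand and develop its module without waiting for anyone else.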

The Technical Scaffolding

Preparation proved crucial. Before the day:

- We created a working scaffolded app with established interfaces

- Each team received reading materials for baseline knowledge

- We set up notebooks with clear input/output contracts

- Teams had full flexibility to experiment with different models, prompts, and approaches

The orchestration was handled through LangChain, with teams free to choose between different OpenAI models (GPT-4, GPT-4-mini, and others) based on their specific needs.

The Day Itself: Sugar, Caffeine, and Collective Problem-Solving

With only four hours of development time (we’d had other sessions earlier), the scaffolded approach proved invaluable. Teams got running quickly using the same tooling we use on client projects.

The office filled with the familiar sounds of a hackathon—groans of frustration when AI hallucinated Snowflake syntax, triumphant cheers when modules finally produced clean output. Each team customised their AI module, experimenting with different prompting strategies and model configurations.

The demo session revealed both successes and limitations:

✅ Simple queries worked brilliantly. Questions like “How many complexity points did we deliver weekly in 2025?” returned accurate results.

⚠️ Complex queries exposed weaknesses. Multi-variate visualisations and sophisticated SQL joins proved challenging.

✅ Voice input exceeded expectations. Whisper performed remarkably well, even in our noisy environment with compressed audio.

✅ Cost was negligible. The entire experiment cost $2.38 in API credits.

Key Learnings: What Actually Works in Practice

Several patterns emerged that have shaped our thinking about AI in analytics:

1. Tight Scope Beats Broad Ambition

Modules with focused responsibilities outperformed those trying to do too much. Splitting visualisation and insights into separate LLM calls yielded better results than combining them.

2. Examples Trump Constraints

Providing concrete input/output examples in prompts worked far better than describing constraints. Positive examples (“here’s what good looks like”) outperformed negative ones (“don’t do this”).
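In practice, "here's what good looks like" means placing question/SQL pairs directly in the prompt. The sketch below shows one way to assemble such a few-shot prompt. `build_prompt`, the `orders` schema, and the example pairs are all hypothetical and chosen only for illustration.

```python
# Hypothetical schema and worked examples; replace with pairs from your own project.
SCHEMA = "orders(order_id, ordered_at, country, revenue)"
EXAMPLES = [
    {"question": "How many orders were placed in 2024?",
     "sql": "SELECT COUNT(*) FROM orders WHERE YEAR(ordered_at) = 2024"},
    {"question": "What is the total revenue per country?",
     "sql": "SELECT country, SUM(revenue) FROM orders GROUP BY country"},
]


def build_prompt(question: str, schema: str, examples: list) -> str:
    """Build a few-shot prompt: instruction, schema, worked examples, then the new question."""
    lines = ["Translate the question into a single SQL query.", f"Schema: {schema}", ""]
    for example in examples:
        lines += [f"Question: {example['question']}", f"SQL: {example['sql']}", ""]
    # End on an open "SQL:" so the model completes the pattern set by the examples.
    lines += [f"Question: {question}", "SQL:"]
    return "\n".join(lines)


prompt = build_prompt("How many orders came from France?", SCHEMA, EXAMPLES)
```

The prompt contains no list of prohibitions. The examples carry the conventions (one statement, explicit column names) implicitly.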

3. State Management Matters

Our shared state object proved invaluable. It allowed:

- All modules to access upstream data and prompts

- Easy mocking of inputs for testing

- Clear debugging when things went wrong

- Seamless integration during the final demo

4. Model Selection Is Context-Dependent

Larger models (GPT-4) handled complex tasks better, whilst smaller models (GPT-4-mini) were perfectly adequate for simple transformations. The key was matching model capability to task complexity.
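Matching model to task can be as simple as a lookup table. The sketch below is a minimal version under stated assumptions: the model identifiers are placeholders rather than real API names, and the assignment of tasks to tiers is hypothetical, not a record of what each team chose.

```python
# Placeholder identifiers: substitute the model names your provider actually offers.
MODEL_BY_TIER = {"simple": "small-model", "complex": "large-model"}

# Hypothetical assignment of pipeline tasks to complexity tiers.
TASK_TIER = {
    "input_validation": "simple",
    "chart_selection": "simple",
    "hypothesis_to_sql": "complex",
    "query_evaluation": "complex",
}


def pick_model(task: str) -> str:
    """Return the model for a task; unknown tasks fall back to the larger model."""
    return MODEL_BY_TIER[TASK_TIER.get(task, "complex")]


validation_model = pick_model("input_validation")
sql_model = pick_model("hypothesis_to_sql")
```

Defaulting unknown tasks to the larger model trades a little cost for safety, which seems reasonable when API spend is as low as it was for us.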

Beyond the Prototype: The Agentic Analytics Revolution

Since our hackathon, we’ve been exploring how tools like Claude Code, Cursor, and other agentic frameworks are moving from experiments to production workflows. Here’s how they’re transforming analytics development:

The Text-to-SQL Evolution

Modern agents have moved far beyond simple query translation:

- Semantic understanding: Agents now grasp table relationships, business logic, and metric definitions

- Iterative refinement: With human feedback, agents learn organisation-specific patterns and conventions

- Context awareness: Integration with dbt documentation and metadata layers provides crucial business context

Our hackathon taught us that the best results come from agents that understand not just SQL syntax, but the semantic meaning of your data model.

SQL-to-Data: Where Agents Excel

Once you have SQL, execution and result handling become critical. Modern frameworks shine here:

- Intelligent query planning: Agents recognise when to use materialised views or cached results

- Error recovery: When queries fail, agents suggest fixes based on error patterns and historical solutions

- Progressive enhancement: For large datasets, agents implement sampling strategies and progressive loading

We found that pairing Snowflake with simple Python orchestration worked brilliantly. Agents could generate both the SQL and the Python code to process results seamlessly.
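The error-recovery loop is straightforward to express in plain Python: run the query, and if it fails, hand the error message back to the model and try again. In the sketch below, `sqlite3` stands in for Snowflake so the example runs anywhere, `run_with_repair` is a hypothetical name, and `fake_llm` is a stub standing in for a real model call. The `deliveries` table is invented for the demonstration.

```python
import sqlite3
from typing import Callable, List, Optional, Tuple


def run_with_repair(conn: sqlite3.Connection, question: str,
                    generate_sql: Callable[[str, Optional[str], Optional[str]], str],
                    max_attempts: int = 3) -> Tuple[str, List[tuple]]:
    """Generate SQL, execute it, and feed any database error back into the next attempt."""
    sql: Optional[str] = None
    error: Optional[str] = None
    for _ in range(max_attempts):
        sql = generate_sql(question, sql, error)
        try:
            return sql, conn.execute(sql).fetchall()
        except sqlite3.Error as exc:
            error = str(exc)  # the message becomes context for the retry
    raise RuntimeError(f"no valid query after {max_attempts} attempts; last error: {error}")


# Demo data in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE deliveries (week TEXT, points INTEGER)")
conn.executemany("INSERT INTO deliveries VALUES (?, ?)",
                 [("2025-W01", 5), ("2025-W01", 3), ("2025-W02", 8)])


def fake_llm(question: str, previous_sql: Optional[str], error: Optional[str]) -> str:
    """Stub model: the first attempt uses a wrong column name, the retry corrects it."""
    if error is None:
        return "SELECT week, SUM(pts) FROM deliveries GROUP BY week"
    return "SELECT week, SUM(points) FROM deliveries GROUP BY week ORDER BY week"


final_sql, rows = run_with_repair(
    conn, "How many complexity points did we deliver weekly in 2025?", fake_llm)
```

Capping the number of attempts matters: without it, a model that keeps repeating the same mistake would loop indefinitely and run up API costs.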

The Human-Agent Partnership

Tools like Claude Code and Cursor represent a fundamental shift in how we develop analytics:

Claude Code excels at:

- Generating complete data pipelines from high-level descriptions

- Refactoring complex transformations whilst maintaining business logic

- Creating documentation that explains the “why”, not just the “what”

- Suggesting optimisations based on query patterns and performance metrics

Cursor and similar IDEs add:

- Real-time code completion with business context awareness

- Inline explanations that help junior analysts understand complex logic

- Automated test generation that covers edge cases humans might miss

- Version-aware refactoring that respects existing dependencies

The crucial insight? These tools amplify human capabilities rather than replacing them. The business context, strategic thinking, and stakeholder understanding remain uniquely human contributions.

The Technical Stack That Actually Delivers

Through extensive experimentation, we’ve refined our recommended stack:

Data Layer:

- Snowflake or BigQuery for the warehouse (either works well as the foundation)

- dbt for transformations, testing, and documentation

- Semantic layer that agents can query for business definitions

Agent Layer:

- Claude API or OpenAI API for core LLM capabilities

- Simple Python for orchestration (resist over-engineering)

- LangChain only when complex workflows require it

- State management using simple objects with clear contracts

Interface Layer:

- Streamlit for rapid prototyping

- React for production applications

- Voice/chat interfaces where they add genuine value

This stack costs very little to experiment with (our entire hackathon used $2.38 in API credits) and scales gracefully as usage grows.

Where MCP Fits: Bridging the Context Gap

Model Context Protocol (MCP) represents the next evolution in agent-tool integration. Rather than building custom connectors for each system, MCP provides:

- Standardised context sharing between agents and your entire analytics stack

- Persistent memory, where a server exposes it, so context can carry across sessions

- Tool discovery so agents automatically know available capabilities

- Security boundaries that respect data governance policies

For analytics teams, MCP could enable agents that understand your complete ecosystem—from raw data sources to business KPIs to stakeholder preferences—without custom integration code.
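Tool discovery is the easiest of these to picture. MCP messages are JSON-RPC 2.0, and a client asks a server what it offers with a `tools/list` request. The sketch below mimics only the shape of that exchange using the standard library. The two tools and the `handle_request` function are hypothetical, and a real server would be built on an MCP SDK that also handles initialisation, transport, and tool calls.

```python
import json

# Hypothetical tools an analytics MCP server might advertise.
TOOLS = [
    {
        "name": "run_query",
        "description": "Run a read-only SQL query against the warehouse.",
        "inputSchema": {
            "type": "object",
            "properties": {"sql": {"type": "string"}},
            "required": ["sql"],
        },
    },
    {
        "name": "describe_metric",
        "description": "Return the business definition of a metric.",
        "inputSchema": {
            "type": "object",
            "properties": {"metric": {"type": "string"}},
            "required": ["metric"],
        },
    },
]


def handle_request(request: dict) -> dict:
    """Answer a tools/list request; anything else gets a JSON-RPC 'method not found' error."""
    if request.get("method") == "tools/list":
        return {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": TOOLS}}
    return {"jsonrpc": "2.0", "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"}}


response = handle_request({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
tool_names = [tool["name"] for tool in response["result"]["tools"]]
print(json.dumps(response, indent=2))
```

Each tool carries a JSON Schema for its inputs, which is what lets an agent call it correctly without bespoke integration code.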

Practical Next Steps: From Experiment to Production

Based on our learnings, here’s how analytics teams can start leveraging AI agents today:

1. Start with High-Volume, Low-Complexity Tasks

- Ad-hoc queries that follow patterns

- Standard report generation

- Data quality checks

- Documentation updates

2. Build Feedback Loops Early

- Capture successful queries as examples

- Track accuracy metrics

- Create improvement cycles

- Involve end users from day one
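A feedback loop does not need much infrastructure to begin with. The sketch below, with the hypothetical `FeedbackLog` class, records whether a user accepted each generated query, keeps the accepted ones as future prompt examples, and exposes a running accuracy figure. The questions and queries in the demo are invented.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackLog:
    """Hypothetical log of user verdicts on generated queries."""
    examples: list = field(default_factory=list)  # accepted question/SQL pairs
    total: int = 0
    accepted: int = 0

    def record(self, question: str, sql: str, accepted: bool) -> None:
        """Count the verdict and keep accepted pairs for reuse as prompt examples."""
        self.total += 1
        if accepted:
            self.accepted += 1
            self.examples.append({"question": question, "sql": sql})

    @property
    def accuracy(self) -> float:
        """Share of generated queries that users accepted (0.0 before any feedback)."""
        return self.accepted / self.total if self.total else 0.0


log = FeedbackLog()
log.record("Weekly points delivered?", "SELECT week, SUM(points) FROM deliveries GROUP BY week", True)
log.record("Top client by revenue?", "SELECT client FROM revenue", False)
log.record("Orders in 2024?", "SELECT COUNT(*) FROM orders WHERE YEAR(ordered_at) = 2024", True)
```

The `examples` list can feed straight back into the prompt, so every accepted query makes the next one more likely to follow house conventions.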

3. Invest in Foundations

- Clean, well-documented data models pay exponential dividends

- Semantic layers help agents understand context

- Example libraries teach agents your patterns

- Clear data contracts enable modular development

4. Measure Real Impact

- Time saved on repetitive tasks

- Reduction in query errors

- Faster insight delivery

- Improved documentation coverage

The Reality Check

Our hackathon revealed both the promise and current limitations of AI in analytics:

Where AI agents excel today:

- Simple to medium-complexity SQL generation

- Pattern recognition and anomaly detection

- Documentation and code explanation

- Routine report generation

Where humans remain essential:

- Understanding business context and strategy

- Stakeholder management and communication

- Complex multi-step analyses

- Ethical and privacy considerations

The Bottom Line

Our hackathon proved that AI-powered analytics isn’t just feasible—it’s already here. But the winning approach isn’t full automation. It’s intelligent augmentation that combines AI’s processing power with human judgment and business understanding.

The tools exist. The APIs are affordable. The potential is enormous.

For data teams, the question isn’t whether to adopt AI agents, but how quickly you can integrate them into your workflow whilst maintaining the quality and trust your stakeholders expect.

The future of analytics isn’t about replacing analysts with AI. It’s about analysts with AI superpowers delivering insights at the speed of business.

Interested in exploring AI-augmented analytics for your organisation? Tasman helps ambitious companies build modern data capabilities that amplify their teams’ impact. Get in touch to learn more.